PARROT Node Deployment (Testnet)

Computing Node Operating Environment

Operating System Requirements: Linux (Ubuntu 20.04 recommended)

Memory Requirements: 64 GB or more

GPU Memory Requirements: 12 GB or more

Storage Requirements: 300 GB or more
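A small preflight script lets you compare a host against these minimums before staking. The sketch below is not part of the PARROT tooling, and its function names are hypothetical. It reads RAM from /proc/meminfo, reads GPU memory from nvidia-smi (largest single GPU), and reads free disk space with GNU df.

```shell
#!/usr/bin/env bash
# Preflight sketch: compares this Linux host with the minimums listed above.
# Thresholds are copied from this guide. RAM and disk are in decimal GB;
# GPU memory is MiB / 1024 as reported by nvidia-smi.

MIN_MEM_GB=64
MIN_GPU_GB=12
MIN_DISK_GB=300

# meets_requirements MEM_GB GPU_GB DISK_GB
# Prints one line per unmet requirement; exit status 0 only if all are met.
meets_requirements() {
  local mem=$1 gpu=$2 disk=$3 status=0
  if (( mem < MIN_MEM_GB )); then
    echo "RAM: ${mem} GB (need ${MIN_MEM_GB} GB)"; status=1
  fi
  if (( gpu < MIN_GPU_GB )); then
    echo "GPU memory: ${gpu} GB (need ${MIN_GPU_GB} GB)"; status=1
  fi
  if (( disk < MIN_DISK_GB )); then
    echo "Free disk: ${disk} GB (need ${MIN_DISK_GB} GB)"; status=1
  fi
  return "$status"
}

# collect_and_check [PATH]: gathers the real values and runs meets_requirements.
# PATH is the filesystem that will hold the Docker images (default: /).
collect_and_check() {
  local path=${1:-/} mem_kib mem_gb gpu_mib gpu_gb disk_gb
  # MemTotal is reported in KiB; convert to decimal GB.
  mem_kib=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
  mem_gb=$(( mem_kib * 1024 / 1000000000 ))
  # Largest single GPU in MiB; non-numeric output (e.g. driver errors) is ignored.
  if command -v nvidia-smi >/dev/null 2>&1; then
    gpu_mib=$(nvidia-smi --query-gpu=memory.total --format=csv,noheader,nounits 2>/dev/null \
      | awk '$1 ~ /^[0-9]+$/ {print $1}' | sort -n | tail -n 1)
  fi
  gpu_gb=$(( ${gpu_mib:-0} / 1024 ))
  # Available space in decimal GB (GNU df); keep only the digits.
  disk_gb=$(df -BGB --output=avail "$path" | tail -n 1 | tr -dc '0-9')
  meets_requirements "$mem_gb" "$gpu_gb" "${disk_gb:-0}"
}
```

For example, `source preflight.sh && collect_and_check /var/lib/docker` checks the filesystem that holds Docker's default data directory. The guide does not say whether the 12 GB GPU figure applies per card or in total, so the sketch assumes a single card.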

Node Docker Images:

- parrotnode/openai_api_gpunode:latest: Local model node compatible with the OpenAI API (requires a GPU)

- parrotnode/openai_api_cpunode:latest: Third-party API interface node (requires only a CPU)

- parrotnode/model_cp_node:latest: Hugging Face model node (requires a GPU)
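If you already know which node type you will run, you can pull its image ahead of time so the first docker run does not also have to download it. In this sketch, the short labels gpu, cpu and hf are hypothetical names for the three images listed above.

```shell
#!/usr/bin/env bash
# Maps a node type to one of the images listed above.
# The labels "gpu", "cpu" and "hf" are hypothetical names used only here.
image_for() {
  case "$1" in
    gpu) echo "parrotnode/openai_api_gpunode:latest" ;;  # local model node (OpenAI-compatible)
    cpu) echo "parrotnode/openai_api_cpunode:latest" ;;  # third-party API node
    hf)  echo "parrotnode/model_cp_node:latest" ;;       # Hugging Face model node
    *)   echo "unknown node type: $1" >&2; return 1 ;;
  esac
}

# Pulls the image for the given node type ahead of the first docker run.
pull_node_image() {
  local image
  image=$(image_for "$1") || return 1
  docker pull "$image"
}
```

`pull_node_image gpu` is equivalent to `docker pull parrotnode/openai_api_gpunode:latest`.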

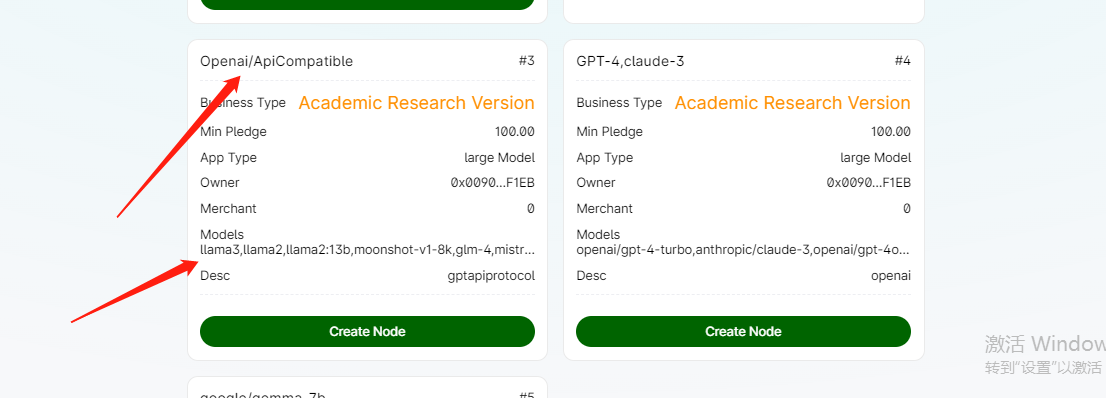

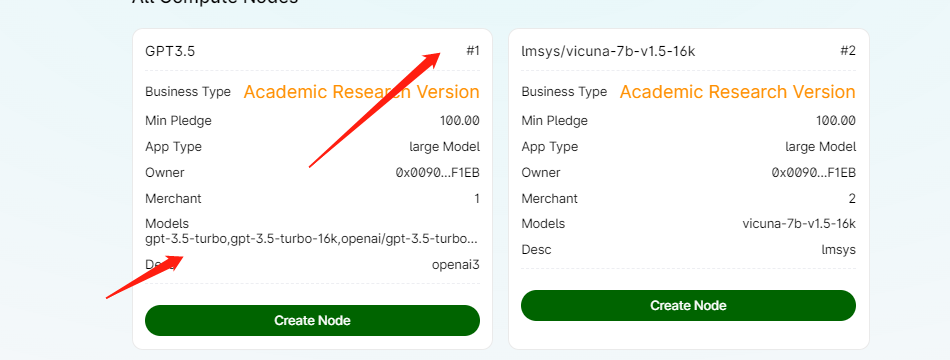

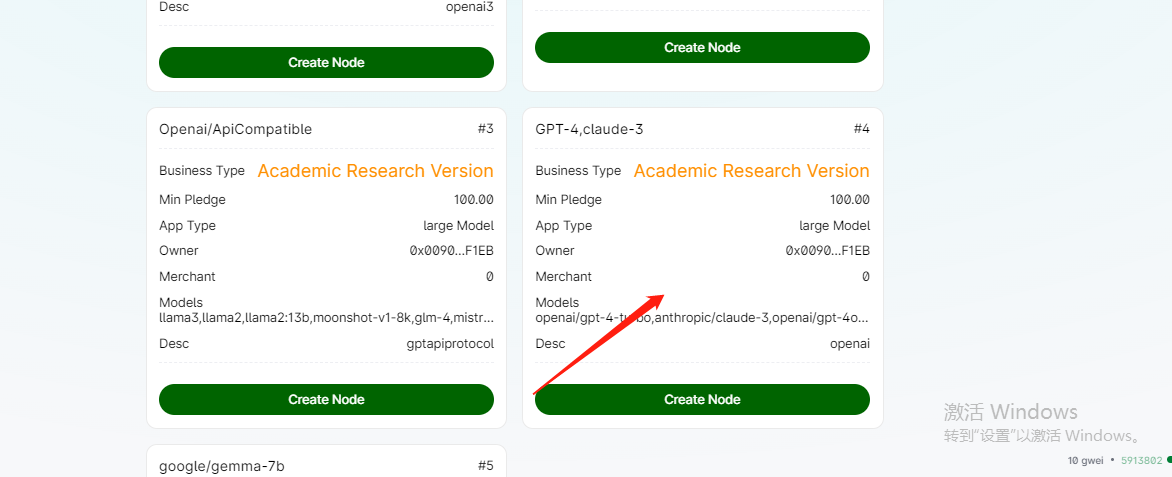

Purchasing and Staking a Node

https://parrotdapp.vercel.app/#/nodes

Starting a Computing Node

GPU Driver Installation Resources:

- Cloud host GPU driver installation (network repository): https://docs.nvidia.com/cuda/cuda-installation-guide-linux/index.html#network-repo-installation-for-ubuntu

- GPU driver installation (Ubuntu): https://docs.nvidia.com/cuda/cuda-installation-guide-linux/index.html#ubuntu

- Configuring Docker to support GPUs (NVIDIA Container Toolkit): https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/latest/install-guide.html
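After installing the toolkit, the NVIDIA Container Toolkit guide configures Docker with `sudo nvidia-ctk runtime configure --runtime=docker` and then `sudo systemctl restart docker`. The sketch below is a hypothetical helper, not part of the node tooling. It checks each piece in order and finishes with a sample container that runs nvidia-smi, similar to the guide's sample workload. If that last step prints the GPU table, `--gpus all` should also work for the node containers.

```shell
#!/usr/bin/env bash
# Checks the GPU stack in order: driver (nvidia-smi), NVIDIA Container
# Toolkit (nvidia-ctk), Docker, then GPU access from inside a container.

# have_cmd NAME: succeeds if NAME is an executable on PATH (or a builtin).
have_cmd() { command -v "$1" >/dev/null 2>&1; }

check_gpu_stack() {
  if ! have_cmd nvidia-smi; then
    echo "nvidia-smi not found: install the GPU driver first" >&2; return 1
  fi
  if ! have_cmd nvidia-ctk; then
    echo "nvidia-ctk not found: install the NVIDIA Container Toolkit" >&2; return 1
  fi
  if ! have_cmd docker; then
    echo "docker not found: install Docker" >&2; return 1
  fi
  # Host-side check: lists the GPUs that the driver can see.
  nvidia-smi || return 1
  # Container-side check: the same table should print from inside a container.
  docker run --rm --gpus all ubuntu nvidia-smi
}
```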

1. Local Model Node Compatible with the OpenAI API (Stake the Corresponding Node Type First)

To run this type of node, stake node #3 first. The supported models are listed in the Supported Model List below.

1. Running the Node

docker run --gpus all -p [external_port]:31003 --name openai_api_gpunode1 parrotnode/openai_api_gpunode:latest ./openai_api_node --net=[network] --prtmodel --host 0.0.0.0 --port [port] --node-key [node_key] --api-keys sk-parrottest --model-names [model_names] --worker-address [external_address]

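[external_address]: The address through which this node can be reached from outside, in the form http://IP:port. If the machine has a fixed external IP address, enter that address and port directly. If the server itself has no fixed IP address but its gateway or router does, configure port mapping on the gateway or router. If neither the server's gateway nor the router has a fixed IP address, use a third-party DDNS (Dynamic DNS) service to set up a dynamic domain name. Methods that use a fixed IP and port are recommended.

The other placeholders: [external_port] is the host port mapped to container port 31003; [port] is the port the node listens on inside the container and should match that container port (31003); [network] is the network to join (test for the testnet); [model_names] is a comma-separated list of models from the Supported Model List.

The first run may be slow because the node needs to download data from the network. If you encounter network errors, you may need access to the international internet.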
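Two mistakes are easy to make with [external_address]: a malformed URL, or a port outside the valid TCP range (1 to 65535). The hypothetical helpers below check the format locally and test whether a TCP connection can be opened. They are not part of the node software, which may validate the value differently. Run the reachability check from a machine outside your local network while the node container is running; otherwise it does not exercise the router or DDNS setup.

```shell
#!/usr/bin/env bash
# Hypothetical pre-checks for the --worker-address value.

# valid_worker_address URL: accepts http(s)://HOST:PORT with PORT in 1..65535.
valid_worker_address() {
  local re='^https?://[A-Za-z0-9.-]+:([0-9]{1,5})$'
  [[ $1 =~ $re ]] || return 1
  # 10# forces base 10 so leading zeros are not read as octal.
  local port=$(( 10#${BASH_REMATCH[1]} ))
  (( port >= 1 && port <= 65535 ))
}

# port_reachable HOST PORT: opens a TCP connection via bash's /dev/tcp,
# giving up after 3 seconds. Exit status 0 means something accepted it.
port_reachable() {
  timeout 3 bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$1" "$2" 2>/dev/null
}
```

For example, `port_reachable 203.0.113.10 61003 && echo reachable`, where 203.0.113.10 is a documentation placeholder for your external IP.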

Example command that deploys the models gemma:7b and phi3:

docker run --gpus all -p 51003:31003 --name openai_api_gpunode1 parrotnode/openai_api_gpunode:latest ./openai_api_node --net=test --prtmodel --node-key 7a21f93ef3aa9100818fc727314e4556566566556665565656560 --api-keys sk-parrottest --model-names gemma:7b,phi3 --worker-address http://202.31.4.11:61003

Replace the worker-address IP and port in this example (202.31.4.11:61003) with the external IP and port of your own node. Ports must be between 1 and 65535. In this example, the mapping is:

202.31.4.11:61003 (external IP and port) -> 192.168.1.10:51003 (local IP and host port) -> 31003 (container port)

With a fixed external IP, use that IP directly and configure the corresponding port-forwarding rule on the external router. Without a fixed external IP, you can configure a dynamic domain name and port instead.
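To avoid mismatched ports when filling in the template, you can assemble the command from a few variables. This is a sketch with placeholder values: 203.0.113.10 is a documentation address, and NODE_KEY must be replaced with your own node key. It uses only the flags shown in the template above. Setting --port to the container port 31003 is an assumption based on the -p mapping.

```shell
#!/usr/bin/env bash
# Sketch: fills in the GPU-node command template from variables.
# All values are placeholders; replace them with your own.
# Set DRY_RUN=1 to print the command instead of running it.

NET=test
HOST_PORT=51003                           # [external_port]: host side of -p
CONTAINER_PORT=31003                      # container side of -p, also passed as --port
NODE_KEY="your_node_key"                  # hypothetical placeholder
MODEL_NAMES="gemma:7b,phi3"               # comma-separated, no spaces
WORKER_ADDRESS="http://203.0.113.10:61003" # external IP:port that maps to HOST_PORT

gpu_node_cmd() {
  local cmd=(docker run --gpus all -p "${HOST_PORT}:${CONTAINER_PORT}"
    --name openai_api_gpunode1 parrotnode/openai_api_gpunode:latest
    ./openai_api_node --net="$NET" --prtmodel --host 0.0.0.0 --port "$CONTAINER_PORT"
    --node-key "$NODE_KEY" --api-keys sk-parrottest
    --model-names "$MODEL_NAMES" --worker-address "$WORKER_ADDRESS")
  if [[ ${DRY_RUN:-0} == 1 ]]; then
    # %q quotes each argument so the printed line can be pasted back into a shell.
    printf '%q ' "${cmd[@]}"; echo
  else
    "${cmd[@]}"
  fi
}
```

Run `DRY_RUN=1 gpu_node_cmd` to print the command for review, then run `gpu_node_cmd` to start the container.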

1.1 Supported Model List:

llama3, llama2, llama2:13b, mistral, gemma:2b, gemma:7b, mixtral, mixtral:8x7b, mixtral:8x22b, mistral-openorca, dolphin-mixtral, qwen, qwen:1.8b, qwen:4b, qwen:7b, qwen:14b, qwen:72b, llava, llava:7b, llava:13b, llava:34b, deepseek-coder, deepseek-coder:33b, llama2-uncensored:7b, llama2-uncensored:70b, codellama, codellama:13b, codellama:34b, phi, nous-hermes2, nous-hermes2:10.7b, nous-hermes2:34b, orca-mini, orca-mini:3b, orca-mini:7b, orca-mini:13b, wizard-vicuna-uncensored, nomic-embed-text, vicuna, llama2-chinese, tinydolphin, zephyr, openhermes, tinyllama, openchat, deepseek-coder:6.7b, wizardcoder

1.2 Highly Recommended Models: mixtral:8x7b (requires 30 GB or more of GPU memory) and mixtral:8x22b (requires 80 GB or more of GPU memory)
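Choosing between the two recommended models depends on GPU memory. The hypothetical helper below encodes the thresholds stated above. It takes a GPU memory size in GB and prefers the larger model when both fit.

```shell
#!/usr/bin/env bash
# Suggests one of the two recommended models for a GPU memory size in GB,
# using the thresholds above (30 GB for mixtral:8x7b, 80 GB for mixtral:8x22b).
recommend_model() {
  local gpu_gb=$1
  if (( gpu_gb >= 80 )); then
    echo "mixtral:8x22b"
  elif (( gpu_gb >= 30 )); then
    echo "mixtral:8x7b"
  else
    echo "no recommended model fits in ${gpu_gb} GB; pick a smaller model from the list" >&2
    return 1
  fi
}
```

For example, `recommend_model 48` prints mixtral:8x7b.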

2. Stopping the Container:

Press Ctrl+C to stop the container, or run docker stop openai_api_gpunode1 from another terminal.

3. Restarting the Container:

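docker start openai_api_gpunode1

The container then runs in the background; follow its output with docker logs -f openai_api_gpunode1. Use docker start instead of repeating docker run, because docker run with the same --name fails while the stopped container still exists.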

4. Downloading Model Files:

docker exec openai_api_gpunode1 prtmodel pull gemma:7b

docker exec openai_api_gpunode1 prtmodel pull phi3

View downloaded models:

docker exec openai_api_gpunode1 prtmodel list
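When a node serves several models, you can generate the pull commands from the same comma-separated value passed to --model-names. The helper names below are hypothetical. The script only calls the prtmodel pull and prtmodel list commands shown above, through docker exec.

```shell
#!/usr/bin/env bash
# Pulls every model named in a comma-separated --model-names value,
# then lists what is installed. The container name matches the examples above.
CONTAINER=openai_api_gpunode1

# split_models "a,b,c": prints one model name per line,
# removing spaces and skipping empty entries.
split_models() {
  local IFS=, name
  local -a names
  read -ra names <<< "$1"
  for name in "${names[@]}"; do
    name=${name//[[:space:]]/}
    if [[ -n $name ]]; then
      echo "$name"
    fi
  done
}

pull_models() {
  local model
  while IFS= read -r model; do
    docker exec "$CONTAINER" prtmodel pull "$model" || return 1
  done < <(split_models "$1")
  docker exec "$CONTAINER" prtmodel list
}
```

For example, `pull_models gemma:7b,phi3` runs the two pull commands above and then lists the installed models.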

5. Testing:

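Send a test request to one of the deployed models (the model field takes a single model name):

docker exec openai_api_gpunode1 curl http://localhost:11434/v1/chat/completions -H "Content-Type: application/json" -d '{
  "model": "gemma:7b",
  "stream": true,
  "messages": [{"role": "user", "content": "Hello"}]
}'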
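To check each deployed model separately, send the request for one model at a time with streaming turned off, so the reply arrives as a single JSON object. This sketch does not escape JSON, so it assumes the prompt contains no double quotes or backslashes. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sends a non-streaming test request for one model through the container.
CONTAINER=openai_api_gpunode1

# chat_payload MODEL PROMPT: prints a minimal chat-completions request body.
# No JSON escaping is done, so PROMPT must not contain " or \.
chat_payload() {
  local model=$1 prompt=$2
  printf '{"model": "%s", "stream": false, "messages": [{"role": "user", "content": "%s"}]}' \
    "$model" "$prompt"
}

# test_model MODEL [PROMPT]: posts the request to the local endpoint used above.
test_model() {
  docker exec "$CONTAINER" curl -s http://localhost:11434/v1/chat/completions \
    -H "Content-Type: application/json" \
    -d "$(chat_payload "$1" "${2:-Hello}")"
}
```

Running `test_model gemma:7b` and then `test_model phi3` tests both models from the earlier example.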

2. Third-party API Interface Node (Stake the Corresponding Node Type First)

To run this type of node, first stake one of the corresponding node types (for example, #1 or #4).

1. Running the Node

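docker run -p [external_port]:31003 --name openai_api_cpunode1 parrotnode/openai_api_cpunode:latest ./openai_api_node --net=[network] --host 0.0.0.0 --port [port] --node-key [node_key] --api-keys [api_key] --model-names [model_names] --worker-address [external_address] --api-base [api_base]

[api_key] is the API key for the third-party service, and [api_base] is the base URL of its API (for example, https://api.moonshot.cn/v1/). For OpenAI, --api-base can be left blank. The other placeholders have the same meaning as for the local model node.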

Parameter example (Moonshot API):

--net=test --prtmodel --host 0.0.0.0 --port 31003 --worker-address http://172.31.37.54:41003 --api-base https://api.moonshot.cn/v1/ --model-names moonshot-v1-8k --logfile kimi --node-key [node_key] --api-keys [moonshot_api_key]

Example (OpenAI API):

docker run -p 41003:31003 --name openai_api_cpunode1 parrotnode/openai_api_cpunode:latest ./openai_api_node --net=test --host 0.0.0.0 --port 31003 --node-key 430869d81fc9701c64e21a8218dddfa6500025a4455656566 --api-keys sk-cse445553ZWTkWC3jnAT3BlbkFJhSwrNoJa0R8upJjpcGxI3ZWTkWC3jnAT3BlbkFJhSwrNoJa0R8upJjpcGxI --api-base https://api.openai.com/v1/ --worker-address http://172.31.4.221:41003
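Because --api-base is optional, a small wrapper can add it only when a base URL is given. The sketch below reads placeholder environment variables (NODE_KEY, API_KEY, MODEL_NAMES, WORKER_ADDRESS, API_BASE, HOST_PORT, NET, all hypothetical names) and uses only the flags shown in the template. It assumes --port should be 31003 to match the -p mapping, as in the examples.

```shell
#!/usr/bin/env bash
# Assembles the third-party API node command, adding --api-base only
# when API_BASE is set. All values come from placeholder environment
# variables; set DRY_RUN=1 to print the command instead of running it.
cpu_node_cmd() {
  local cmd=(docker run -p "${HOST_PORT:-41003}:31003" --name openai_api_cpunode1
    parrotnode/openai_api_cpunode:latest ./openai_api_node
    --net="${NET:-test}" --host 0.0.0.0 --port 31003
    --node-key "$NODE_KEY" --api-keys "$API_KEY"
    --model-names "$MODEL_NAMES" --worker-address "$WORKER_ADDRESS")
  # Only pass --api-base when a third-party base URL was provided.
  if [[ -n ${API_BASE:-} ]]; then
    cmd+=(--api-base "$API_BASE")
  fi
  if [[ ${DRY_RUN:-0} == 1 ]]; then
    printf '%q ' "${cmd[@]}"; echo
  else
    "${cmd[@]}"
  fi
}
```

For a Moonshot-backed node, set API_BASE=https://api.moonshot.cn/v1/. For OpenAI, leave API_BASE unset.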

2. Stopping the Container:

Press Ctrl+C to stop the container, or run docker stop openai_api_cpunode1 from another terminal.

3. Restarting the Container:

docker start openai_api_cpunode1